If your underlying filesystems or devices have different response times (e.g. some devices are cached or on SSD while others are on spinning disk), fio can behave quite differently depending on how the fio job file is written. There are typically two approaches:

1) Have a single “job” that has multiple devices

2) Make each device a “job”

With a single job, the iodepth parameter is the total iodepth for the job (not per device). If multiple jobs are used (one device per job), the iodepth value is per device.

Option 1 (a single job) results in roughly equal IO across disks regardless of response time. This is like having a volume manager or RAID device, in that the overall IO rate is limited by the slowest device.

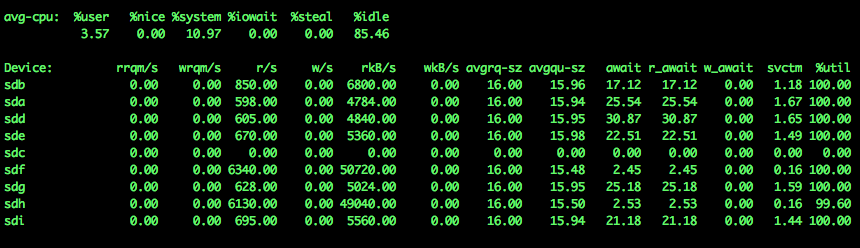

For example, notice that even though the wait (response) times are quite uneven, ranging from 0.8 ms to 1.5 ms, the r/s rate is quite even. Notice, though, that the queue size varies considerably: that is how similar throughput is achieved in the face of uneven response times.
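The link between these iostat columns is Little's law: for each device, the average queue size equals its IO rate times its response time. A short worked sketch using the response-time range quoted above, treating "roughly equal" r/s as exactly equal (an idealization):

$$
\bar{Q}_i = X_i \, R_i, \qquad X_i \approx X \;\Rightarrow\; \frac{\bar{Q}_{\text{slow}}}{\bar{Q}_{\text{fast}}} = \frac{R_{\text{slow}}}{R_{\text{fast}}} = \frac{1.5\ \text{ms}}{0.8\ \text{ms}} \approx 1.9
$$

So the slowest device has to hold roughly twice as many IOs in its queue as the fastest one to sustain the same r/s.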

To get this behavior, use the following fio job file. It allows fio to keep up to 128 outstanding IOs, distributed among the 8 “files” (in this case, devices). To maintain the maximum throughput for the overall job, the devices with slower response times end up with more outstanding IOs than the devices with faster response times.

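```ini
[global]
bs=8k
; iodepth is the total for this job, shared by every device in filename
iodepth=128
direct=1
ioengine=libaio
randrepeat=0
group_reporting
time_based
runtime=60
filesize=6G

[job1]
rw=randread
; one job driving eight devices (colon-separated list)
filename=/dev/sdb:/dev/sda:/dev/sdd:/dev/sde:/dev/sdf:/dev/sdg:/dev/sdh:/dev/sdi
name=random-read
```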
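To make the arithmetic concrete, here is an idealized model of this single-job setup. It assumes fio keeps all 128 IOs in flight, that every device completes the same number of IOs per second, and it applies Little's law per device (outstanding IOs = IO rate × response time). The function name `shared_queue_model` and the latency values are hypothetical, not measurements from the figure.

```python
"""Idealized model of Option 1: one fio job whose iodepth is shared by all devices.

Assumptions (hypothetical, for illustration only):
  * the job always has `total_iodepth` IOs in flight,
  * every device completes the same number of IOs per second,
  * Little's law holds per device: outstanding_i = iops * latency_i.
"""


def shared_queue_model(total_iodepth, latencies_ms):
    """Return (per-device IOPS, list of per-device outstanding IOs)."""
    # Outstanding IOs across all devices add up to the job's iodepth:
    #   total_iodepth = iops * sum(latency_i)  ->  solve for iops.
    total_latency_s = sum(latencies_ms) / 1000.0
    iops = total_iodepth / total_latency_s
    # Each device's share of the queue is proportional to its latency,
    # so slower devices hold more of the outstanding IOs.
    outstanding = [iops * ms / 1000.0 for ms in latencies_ms]
    return iops, outstanding


if __name__ == "__main__":
    # Hypothetical response times for 8 devices, spanning 0.8 ms to 1.5 ms.
    latencies = [0.8, 0.9, 1.0, 1.0, 1.1, 1.2, 1.4, 1.5]
    iops, queues = shared_queue_model(128, latencies)
    print(f"each device: {iops:.0f} IO/s, total: {iops * len(latencies):.0f} IO/s")
    for ms, q in zip(latencies, queues):
        print(f"  latency {ms:.1f} ms -> ~{q:.1f} IOs outstanding")
```

In this model the per-device rate is capped by the sum of all latencies, which is why a slow device drags down the whole job, much like a RAID set.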

The second option gives uneven throughput because each device belongs to a separate job and is therefore completely independent. The iodepth parameter applies per device, so every device has 16 outstanding IOs (8 × 16 = 128 in total, the same as in Option 1). Each device's throughput (r/s) is tied directly to its own response time: a device that responds 10x faster delivers roughly 10x the throughput. For simulating real workloads this is probably not what you want.

For instance, when sizing a working set against a cache, the disks with better throughput may dominate the cache.

```ini
[global]
bs=8k
; iodepth is per job, i.e. per device here (8 jobs x 16 = 128 in flight)
iodepth=16
direct=1
ioengine=libaio
randrepeat=0
group_reporting
time_based
runtime=60
filesize=2G

[job1]
rw=randread
filename=/dev/sdb
name=raw-random-read

[job2]
rw=randread
filename=/dev/sda
name=raw-random-read

[job3]
rw=randread
filename=/dev/sdd
name=raw-random-read

[job4]
rw=randread
filename=/dev/sde
name=raw-random-read

[job5]
rw=randread
filename=/dev/sdf
name=raw-random-read

[job6]
rw=randread
filename=/dev/sdg
name=raw-random-read

[job7]
rw=randread
filename=/dev/sdh
name=raw-random-read

[job8]
rw=randread
filename=/dev/sdi
name=raw-random-read
```
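For comparison, a similarly idealized model of the one-job-per-device setup. Each job keeps its own 16 IOs in flight, so by Little's law a device's rate is simply iodepth divided by its response time. The function name `independent_jobs_model` and the latencies (two cached or SSD devices at 0.5 ms, six spinning disks at 5 ms) are hypothetical, chosen only to show the 10x effect described above.

```python
"""Idealized model of Option 2: one fio job per device, iodepth per job.

Assumptions (hypothetical, for illustration only):
  * each job always has `iodepth_per_job` IOs in flight on its own device,
  * Little's law holds: iops_i = iodepth_per_job / latency_i.
"""


def independent_jobs_model(iodepth_per_job, latencies_ms):
    """Return the IOPS each independent job achieves on its device."""
    # No sharing between jobs: throughput is inversely proportional
    # to each device's own response time.
    return [iodepth_per_job / (ms / 1000.0) for ms in latencies_ms]


if __name__ == "__main__":
    # Hypothetical: two cached/SSD devices at 0.5 ms, six spinning disks at 5 ms.
    latencies = [0.5, 0.5, 5.0, 5.0, 5.0, 5.0, 5.0, 5.0]
    rates = independent_jobs_model(16, latencies)
    total = sum(rates)
    for ms, r in zip(latencies, rates):
        # Share of all IOs issued by the whole run that hit this device.
        print(f"latency {ms:.1f} ms -> {r:.0f} IO/s ({r / total:.0%} of all IOs)")
```

With these numbers the two fast devices receive most of the IOs, which is the skew that lets them dominate a cache in a working-set test.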