TL;DR

If your storage system implements inline compression, results for small random writes run with time_based and runtime may be inflated on fio versions < 3.3, because fio’s default data pattern generates unexpectedly compressible data in that case. Although unintuitive, enabling compression often increases performance, especially if the bottleneck is the storage media, replication, or a combination of both.

Therefore, if you compare results generated with fio < 3.3 against results from fio >= 3.3, random write performance on the same storage platform may appear lower with the more recent fio versions.

fio-3.3 was released in December 2017, but older fio versions are still in use, particularly on distributions with long term support (LTS). For instance, Ubuntu 16, which is supported until 2026, ships with fio-2.2.10.
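To tell which side of the 3.3 boundary a given host is on, a small check like the following can help. It is a minimal sketch that assumes the version string has the form `fio-<major>.<minor>[...]`, as in the `fio-2.2.10` and `fio-3.3` names used above; the helper names are hypothetical.

```python
import re
from typing import Tuple


def fio_major_minor(version_output: str) -> Tuple[int, int]:
    """Extract (major, minor) from a string such as 'fio-2.2.10' or 'fio-3.3'.

    Assumption: the string contains 'fio-<major>.<minor>'.
    """
    match = re.search(r"fio-(\d+)\.(\d+)", version_output)
    if match is None:
        raise ValueError(f"unrecognised fio version string: {version_output!r}")
    return int(match.group(1)), int(match.group(2))


def predates_fio_3_3(version_output: str) -> bool:
    """True if the version is older than 3.3 (numeric, not string, comparison)."""
    return fio_major_minor(version_output) < (3, 3)
```

Comparing as integer tuples matters here: a plain string comparison would wrongly rank a version such as 3.28 below 3.3.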

What causes reduced write performance from fio-3.3 onwards?

The default data pattern generation in fio is known as scramble_buffers. The trade-off is that generating truly random data for every IO requires more CPU cycles, whereas lightly scrambling the buffers is usually enough to avoid simple/naive compression and dedupe.

What I found is that prior to fio-3.3, scrambling does not work as intended for small IO sizes written randomly with the time_based and runtime parameters, which is a common technique in storage performance testing. Large sequential writes, however, work as intended.

Scramble buffers with sequential/large IO sizes (all fio versions)

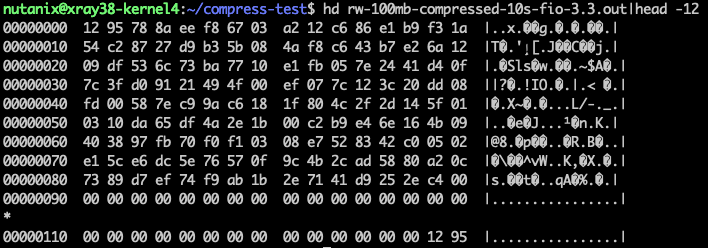

Here is what the data pattern looks like for a file written by fio with the default/scramble data pattern using 1MB IO size sequentially. Although there are some repeating patterns and NULL bytes, a 100MB file compresses to 86MB using gzip, so the data is 14% compressible.
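The "14% compressible" figure is simply the space saved by gzip as a percentage of the original size. A minimal sketch of that calculation, using Python's standard `gzip` module rather than the gzip command line (so exact sizes may differ slightly); the function names are hypothetical:

```python
import gzip


def percent_saved(original_size: int, compressed_size: int) -> float:
    """Space saved by compression, as a percentage of the original size."""
    return 100 * (original_size - compressed_size) / original_size


def gzip_compressibility(data: bytes) -> float:
    """Compress the data in memory with gzip and report the percentage saved."""
    return percent_saved(len(data), len(gzip.compress(data)))


def file_compressibility(path: str) -> float:
    """Same measurement for a file written by fio (reads it fully into memory)."""
    with open(path, "rb") as handle:
        return gzip_compressibility(handle.read())
```

With the sizes quoted here, `percent_saved(100, 86)` gives 14.0.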

fio data dump

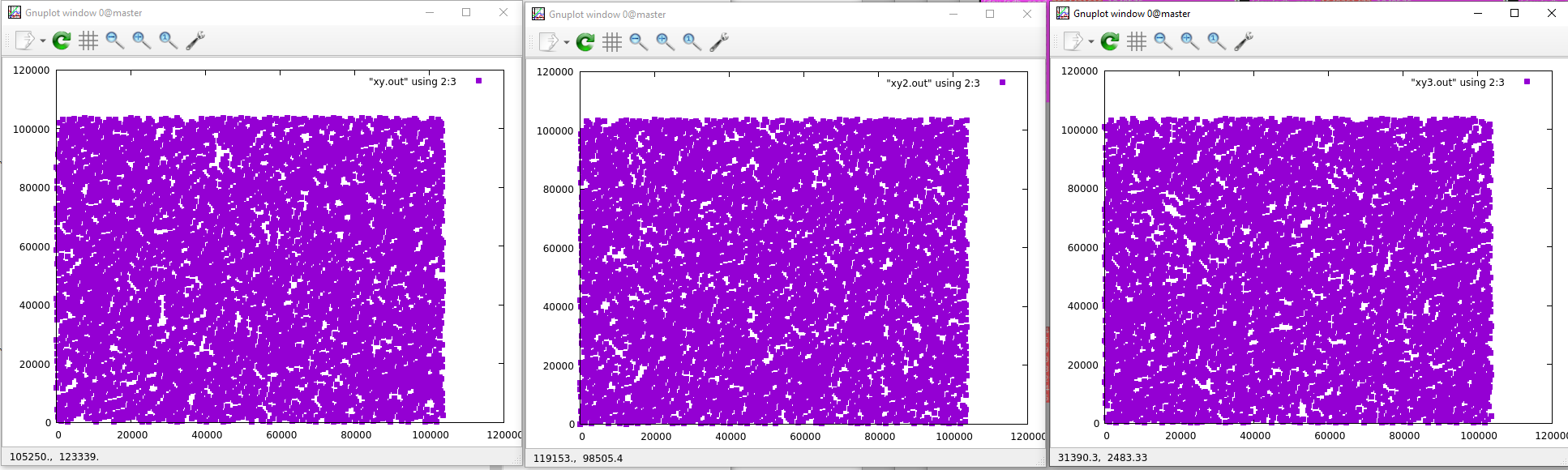

Scramble buffers with small/random IO sizes (prior to 3.3)

Prior to 3.3, when we ask fio to generate small/random data using time_based and runtime with the default scramble buffers algorithm, there are a large number of NULL bytes in the output. A 100MB file compresses to 28MB using gzip, so the data is 72% compressible, compared with 14% for the sequentially written file.
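One way to quantify the "large number of NULL bytes" without reading a hex dump is to count them directly. The sketch below is illustrative only; the 8192-byte default block size is an assumption chosen to match an 8k IO size, and the function names are hypothetical.

```python
from typing import List


def null_fraction(data: bytes) -> float:
    """Fraction of bytes in the buffer that are NULL (0x00)."""
    return data.count(0) / len(data) if data else 0.0


def null_fraction_per_block(data: bytes, block_size: int = 8192) -> List[float]:
    """NULL fraction for each block_size-sized slice of the buffer."""
    return [
        null_fraction(data[offset:offset + block_size])
        for offset in range(0, len(data), block_size)
    ]
```

Running `null_fraction_per_block` over the contents of a file written by each fio version would show whether the NULLs are spread evenly or concentrated in particular blocks.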

Scramble buffers with small/random IO sizes (fio 3.3 onwards)

From fio 3.3 onwards, the data that fio generates for small/random IO patterns with time_based and runtime looks more like the pattern generated by large/sequential writes. There are few NULLs and a 100MB file compresses to 84MB using gzip, about the same degree of compressibility as the sequential write. With largely incompressible data we get no boost from inline compression, so performance results may be lower.

Returning random write performance to pre fio 3.3 levels

fio allows the user to deliberately generate compressible data using the parameter

buffer_compress_percentage

With this parameter set to 70 (to simulate the 100MB -> ~30MB compression seen with pre-3.3 versions), I was able to get back the “lost” performance. However, the way fio creates compressible data is a bit odd: it seems to add a lot of NULL bytes at the end of the block, rather than interspersing them with the other data.
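The difference between NULLs padded at the end of a block and NULLs interspersed through it can be checked per block. This is a rough sketch with hypothetical helper names, not a description of how fio itself lays out the buffer:

```python
def trailing_null_run(block: bytes) -> int:
    """Length of the run of NULL bytes at the very end of the block."""
    return len(block) - len(block.rstrip(b"\x00"))


def trailing_share_of_nulls(block: bytes) -> float:
    """Share of the block's NULL bytes that sit in the trailing run.

    Close to 1.0 means the NULLs are padded at the end of the block;
    close to 0.0 means they are spread through the data.
    """
    total_nulls = block.count(0)
    return trailing_null_run(block) / total_nulls if total_nulls else 0.0
```

For example, a block made of three data bytes followed by seven NULLs scores 1.0, while a block alternating data and NULL bytes scores far lower.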

fio scripts

Large sequential fio script

[global]

bs=1m

rw=write

direct=1

ioengine=libaio

iodepth=32

[sw-once]

filename=${FIOFILENAME}

size=100mb

Small random timed fio script

[global]

time_based

runtime=10s

bs=8k

rw=randwrite

direct=1

ioengine=libaio

iodepth=32

[rw-timed]

filename=${FIOFILENAME}

size=100mb
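For the buffer_compress_percentage experiment, the job file itself is not shown, so the following is only a guess at its shape: the small random timed script above with the parameter added to the [global] section. The placement of the option and the helper name are assumptions.

```python
def small_random_job(compress_pct: int) -> str:
    """Render the small random timed job with buffer_compress_percentage added.

    Assumption: the option is simply added to [global]; the article does not
    show the exact job file that was used.
    """
    if not 0 <= compress_pct <= 100:
        raise ValueError("compress_pct must be between 0 and 100")
    lines = [
        "[global]",
        "time_based",
        "runtime=10s",
        "bs=8k",
        "rw=randwrite",
        "direct=1",
        "ioengine=libaio",
        "iodepth=32",
        f"buffer_compress_percentage={compress_pct}",
        "",
        "[rw-timed]",
        "filename=${FIOFILENAME}",  # fio expands this from the environment
        "size=100mb",
    ]
    return "\n".join(lines) + "\n"
```

Writing `small_random_job(70)` to a file and passing that file to fio, with FIOFILENAME set in the environment, would approximate the 70% setting described earlier.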